Radium Selects VDURA to Drive Next-Generation AI Infrastructure with Seamless Scalability and Ease of Use

VDURA Launches All-Flash NVMe V5000 to Power AI Factories and GPU Clouds with Unmatched Performance, Scale, and Reliability

Derrick Shannon

Derrick.Shannon@touchdownpr.com

VDURA, a leader in AI and high-performance computing data infrastructure, today announced the launch of its V5000 All-Flash Appliance, engineered to address the escalating demands that arise as AI pipelines and generative AI models move into production. Built on the revolutionary VDURA V11 data platform, the system delivers GPU-saturating throughput while ensuring the durability and availability of data under 24x7x365 operating conditions, setting a new benchmark for AI infrastructure scalability and reliability.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20250304386031/en/

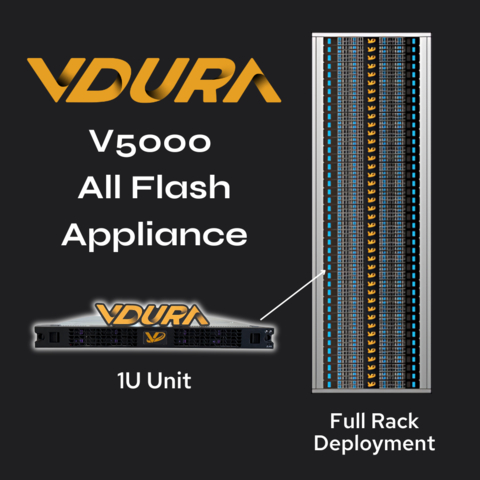

VDURA announced the launch of its V5000 All-Flash Appliance, engineered to address the escalating demands as AI pipelines and generative AI models move into production. (Photo: Business Wire)

VDURA V11 Software is now available on the new 'F Node,' a modular 1U platform with Intelligent Client-Side Erasure Coding—delivering over 1.5PB per rack unit with seamless scalability and reliability.

Effortless Scalability for AI Workloads

Unlike traditional AI storage solutions that require overprovisioning, VDURA enables AI service providers to expand dynamically, scaling from a few nodes to thousands with zero downtime. As GPU clusters grow, V5000 storage nodes can be seamlessly added—instantly increasing capacity and performance without disruption. By combining the V5000 All-Flash with the V5000 Hybrid, VDURA delivers a unified, high-performance data infrastructure supporting every stage of the AI pipeline—from high-speed model training to long-term data retention.

Designed for AI at Scale: Performance, Density & Efficiency

The VDURA Data Platform’s parallel file system (PFS) architecture is engineered for AI, eliminating bottlenecks caused by high-frequency checkpointing. VDURA’s Intelligent Client architecture ensures AI storage remains high-performance and reliable at scale, implementing lightweight client-side erasure coding without adding unnecessary CPU overhead—unlike alternative solutions that impose heavy compute demands.
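The release does not specify which erasure code VDURA's Intelligent Client uses. As a generic illustration only, the Python sketch below shows the basic idea of client-side erasure coding with a single XOR parity chunk (a simpler scheme than production codes such as Reed-Solomon): the client splits a write into data chunks and computes the parity itself, so storage nodes only store chunks, and any one lost chunk can be rebuilt from the survivors. The names `encode_stripe` and `recover_chunk` are hypothetical and are not part of any VDURA API.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode_stripe(data: bytes, k: int) -> List[Optional[bytes]]:
    """Hypothetical client-side encoder: split data into k equal chunks
    (zero-padded) and append one XOR parity chunk computed on the client."""
    chunk_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(chunk_len * k, b"\x00")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(k)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def recover_chunk(stripe: List[Optional[bytes]], missing: int) -> bytes:
    """Rebuild the chunk at index `missing` by XOR-ing every surviving chunk
    (data and parity). Works for any single lost chunk."""
    survivors = [c for i, c in enumerate(stripe) if i != missing]
    return reduce(xor_bytes, survivors)


# Usage: encode a write, lose one chunk, rebuild it.
stripe = encode_stripe(b"checkpoint-shard-0001", k=4)
lost = stripe[2]
stripe[2] = None  # simulate losing the storage node that held chunk 2
assert recover_chunk(stripe, 2) == lost
```

Single parity tolerates only one lost chunk per stripe; schemes that must survive multiple concurrent failures add more parity chunks at the cost of extra client-side computation.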

By combining client-side erasure coding, RDMA acceleration, and flash-optimized data handling, the V5000 sustains AI workloads at any scale, delivering:

- Seamless AI Storage Expansion – Scale effortlessly as GPU clusters grow, with no downtime or overprovisioning.

- AI Checkpoint Optimization – Eliminates write bottlenecks that slow AI training.

- Data Center Efficiency – 1.5PB+ per U, reducing power, cooling, and footprint costs.

- RDMA Acceleration – Architected for NVIDIA GPUDirect and RDMA, with optimizations rolling out later this year.

“The V5000 All-Flash Appliance, powered by our next-gen V11 Data Platform, delivers not only the peak performance enterprises expect—but while high performance is necessary, it is not enough,” said Ken Claffey, CEO of VDURA. “AI workloads demand sustained high performance and unwavering reliability. That’s why we’ve engineered the V5000 to not just hit top speeds, but to sustain them—even in the face of hardware failures—ensuring every terabyte of data fuels innovation, not inefficiencies or downtime.”

Radium Selects VDURA to Power Massive-Scale AI Training

As AI models grew in complexity, Radium, an NVIDIA Cloud Partner, required a co-located storage solution that could scale dynamically with its expanding GPU infrastructure, without increasing data center footprint or energy consumption. After evaluating multiple AI storage solutions, Radium selected VDURA for its unique combination of performance, efficiency, and seamless scalability.

The partnership underscores VDURA’s ability to deliver:

- Sustained AI Performance – Full-bandwidth data access for NVIDIA H100 GPUs and NVIDIA GH200 Grace Hopper Superchips.

- Modular Scaling – Storage grows in sync with AI compute, eliminating overprovisioning.

- Data Center Optimization – VDURA’s 1U design delivers industry-leading density and efficiency.

“Our enterprise customers demand robust AI infrastructure with uncompromising performance and uptime,” said Adam Hendin, CEO and Co-Founder of Radium. “Through our partnership with VDURA, we’re demonstrating how Radium’s enterprise AI platform delivers the scalability and speed needed for production AI workloads, while providing the enterprise-grade reliability required to meet demanding SLAs—ensuring organizations can run consistent, high-performance AI operations with confidence.”

VDURA is Redefining AI Infrastructure with Seamless Scalability

With AI adoption accelerating, VDURA is setting the new standard for AI-scale data performance. The V5000 All-Flash and V5000 Hybrid, powered by the VDURA V11 Data Platform, provide an end-to-end solution for AI workloads—ensuring performance, scalability, and efficiency without compromise.

Key Features:

- AI-Optimized Performance – Linear performance scalability designed for high-bandwidth AI workloads.

- Zero-Downtime Scaling – Storage expands in real-time as AI compute clusters grow.

- Breakthrough AI Metadata Performance – Powered by VeLO, VDURA’s next-gen metadata engine. Its intelligent metadata handling builds on VDURA’s proven Metadata Triplication to sustain performance and enable rapid recovery, even during hardware failures.

- Future-Proof Infrastructure – RDMA and NVIDIA GPUDirect optimizations planned for rollout later this year.

F Node: The Power Behind VDURA V11 All Flash

The F Node delivers extreme performance and efficiency in a compact 1U form factor, designed for AI-scale workloads with:

- Three PCIe and one OCP Gen 5 slots for high-speed front-end and back-end expansion connectivity.

- NVIDIA ConnectX-7 InfiniBand NICs for ultra-low latency data transfer.

- One AMD EPYC 9005 Series processor and 384GB of memory.

- Up to 12 U.2 128TB NVMe SSDs—providing over 1.5PB per rack unit.
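The density figure follows directly from the drive specification above. A quick check, written as a Python sketch that assumes decimal units (1 PB = 1,000 TB) and counts raw drive capacity before any erasure-coding overhead; `raw_capacity_pb` is a hypothetical helper, not a VDURA tool:

```python
# Hypothetical helper: figures come from the F Node spec list (12 x 128TB U.2 SSDs in 1U).
def raw_capacity_pb(drives: int, drive_tb: float) -> float:
    """Return raw capacity in decimal petabytes (1 PB = 1,000 TB), before protection overhead."""
    return drives * drive_tb / 1000


F_NODE_DRIVES = 12  # "Up to 12 U.2 128TB NVMe SSDs"
DRIVE_TB = 128      # capacity per SSD, decimal terabytes
RACK_UNITS = 1      # F Node form factor

per_rack_unit = raw_capacity_pb(F_NODE_DRIVES, DRIVE_TB) / RACK_UNITS
print(f"{per_rack_unit:.3f} PB raw per rack unit")  # prints "1.536 PB raw per rack unit"
```

Twelve 128TB drives give 1,536TB, or about 1.54PB of raw capacity in a single 1U node, consistent with the "over 1.5PB per rack unit" figure. Usable capacity will be lower once data protection overhead is applied.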

Availability

The VDURA V5000 All-Flash Appliance is available now for customer evaluation and qualification, with early deployments already underway in leading AI data centers. VDURA is actively working with AI service providers to optimize real-world performance and scalability ahead of broader availability. General availability is planned for later this year, ensuring customers can scale AI infrastructure with confidence.

Supporting Quotes:

Matt Ritter, VP of Engineering and Product, SourceCode

"SourceCode selected the VDURA Data Platform and V5000 Appliances for the Atlas AI Ignite and Atlas AI Factory Cluster systems to enable seamless scale from proof-of-concept to exascale. With industry-leading IOPS, flexible deployment, and robust data protection, VDURA helps ensure SLAs and simplify integration. The SourceCode-VDURA collaboration helps customers realize the business benefits of AI."

Industry Analyst:

Steve McDowell, Chief Analyst, NAND Research

"As AI transitions from experimental projects to production-scale operations, infrastructure bottlenecks are becoming the primary barrier to innovation. VDURA's V5000 All-Flash Appliance tackles this challenge head-on by redefining what enterprises should expect from AI storage. Traditional solutions force a trade-off between performance, scalability, and reliability – but VDURA demonstrates that these compromises are no longer necessary. VDURA isn’t just keeping pace with AI’s growth; it’s future-proofing data infrastructure."

About VDURA

VDURA is a leader in AI and HPC data infrastructure, delivering high-performance, scalable storage solutions that power the world’s most demanding AI applications. For more information, visit www.vdura.com.

About Radium

Radium is a next-generation AI cloud platform designed for enterprises, providing seamless model training, fine-tuning, and deployment with high performance infrastructure. For more information, visit www.radium.cloud.


Business Wire
